Agents, Assistants, Automations: What Vendors Call Them vs What They Actually Do

Measure Time Expansion, Not Terminology

Christian J. Ward

Nov 17, 2025

When vendors demo their "AI agent," they're often showing you a workflow automation with better branding. Most of the agents I'm seeing in the market are really just automations: the steps you'd otherwise repeat by hand, now wrapped in AI terminology.

Fred should work in procurement.

That meme hits because it's true. Pull back the curtain on most "agentic" platforms and you'll find predefined workflows triggered by specific conditions.

They execute fixed logic rather than making autonomous decisions. And there's nothing wrong with that, as long as you know what you're buying and what you're paying for.

The confusion comes from treating these three categories—agents, assistants, and automations—as interchangeable when they represent fundamentally different capabilities with different cost structures and risk profiles.

As with the first time you hand your teenager the car keys, autonomy brings additional risk.

Vendors call everything an agent because it sounds more advanced. But the terminology matters less than understanding what you're actually getting and whether it expands your team's capacity to do valuable work.

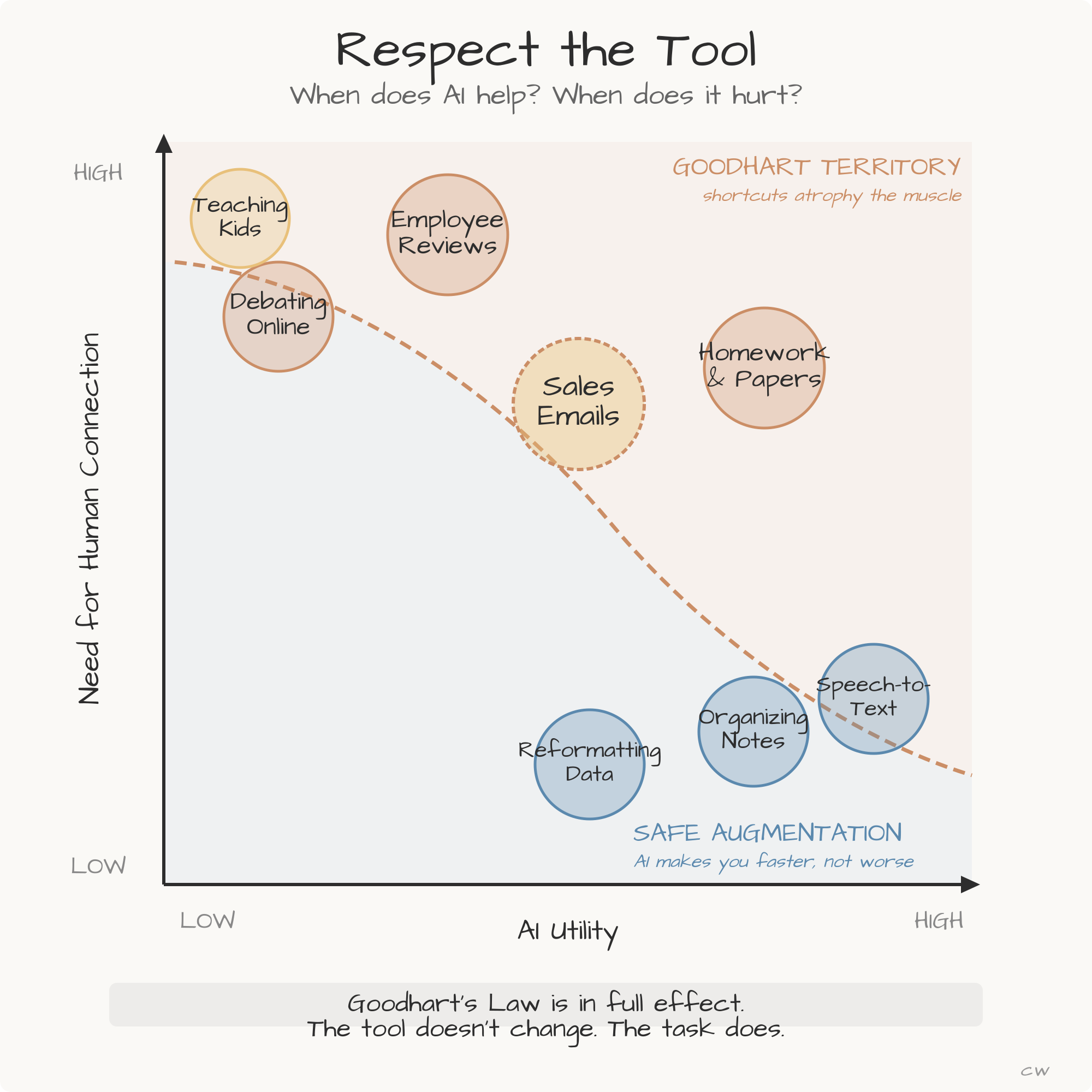

The AI Three-Layer Stack of Name Calling

People keep blending these terms, so it helps to put them in a simple stack.

This is how I think about the relationship between automations, assistants, and agents. It's not a perfect taxonomy: you can run agents without an assistant, and you can layer automations under an agent or vice versa.

But as a framework for categorizing the interaction and setting expectations, it works.

Automations sit at the base because they handle the basic repeating motions we've relied on for a long time.

The assistant sits above that as the thing we actually talk to. It gathers context, listens for intent, and acts as the place where the real coordination happens.

Then you have agents under the assistant, each one locked into a tight capability. They focus on the work itself while the assistant keeps the bigger picture steady.

This structure matters.

It keeps tasks from spilling across boundaries, and it keeps people from expecting one layer to do a job that belongs to another. Most of the confusion people feel around AI comes from treating everything as one big blob instead of a set of roles that complement one another.

Be careful which Agent you choose. Both are dangerous.

| Layer | Present Day | Near Future | Examples |
|---|---|---|---|
| Automations | Simple triggers that move data, push alerts, and keep routine tasks moving. | More adaptive paths and easier setup, still predictable and contained. | Zapier, Make, IFTTT, basic HubSpot workflows |
| AI Assistant | The conversation surface that gathers information, answers questions, and figures out what the user is trying to do. | A steady control tower that tracks state, routes work to agents, and keeps everything aligned. | ChatGPT, Claude, Gemini, Perplexity |
| AI Agent | Narrow capability units that stay in their lane so their decisions stay focused. | More self-directed inside that lane, able to run longer tasks and hand results back cleanly. | Claude Code, Replit Agents, CrewAI |
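To make the three layers concrete, here is a minimal Python sketch of how they might relate. Every name in it (`Automation`, `Agent`, `Assistant`, `route`) is hypothetical and does not correspond to any product in the table. The intent is passed in explicitly; a real assistant would infer it from the conversation.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Optional

# Hypothetical three-layer stack; none of these names are a real vendor API.


@dataclass
class Automation:
    """Base layer: a fixed trigger mapped to a fixed action. No decisions."""
    trigger: str
    action: Callable[[dict], str]

    def run(self, event: dict) -> Optional[str]:
        # Fires only when the event type matches the predefined trigger.
        if event.get("type") == self.trigger:
            return self.action(event)
        return None


@dataclass
class Agent:
    """Narrow capability unit that only works inside its own lane."""
    lane: str

    def handle(self, task: str) -> str:
        # A real agent would plan and call tools here; the sketch just reports the task.
        return f"[{self.lane} agent] handled: {task}"


@dataclass
class Assistant:
    """Conversation layer: routes each request to the agent that owns that lane."""
    agents: Dict[str, Agent] = field(default_factory=dict)

    def route(self, intent: str, task: str) -> str:
        agent = self.agents.get(intent)
        if agent is None:
            # No agent owns this lane, so escalate instead of improvising.
            return f"No agent for '{intent}'; escalating to a human."
        return agent.handle(task)


notify = Automation(trigger="form_submitted", action=lambda e: f"alert sent for {e['email']}")
assistant = Assistant(agents={"billing": Agent("billing"), "listings": Agent("listings")})

print(notify.run({"type": "form_submitted", "email": "a@example.com"}))  # alert sent for a@example.com
print(assistant.route("billing", "issue a refund"))  # [billing agent] handled: issue a refund
print(assistant.route("legal", "review a contract"))  # No agent for 'legal'; escalating to a human.
```

The design choice worth noticing is the escalation branch: when no agent owns a lane, the assistant hands off rather than letting some other agent wander outside its boundaries.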

The most important reasons to build this way come down to stratification. It mirrors the way we build org charts for a reason. Any time you expect someone to own a task, you want clear roles, boundaries, and responsibilities.

You want a layer above them that understands how their work connects with everyone else, and you want a way to check whether things are drifting off course.

AI needs the same structure. Without it, the system loses shape. And anyone who has pushed Claude Code out of planning mode knows how fast things can get away from you.

Give an agent too much freedom and it can sprint off, generating a mountain of output before you can even react. Agents are powerful, but they need supervision, limits, and context that only the assistant can provide.
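One way to picture "supervision and limits" is a loop that runs an agent one step at a time, caps the number of steps, and stops the moment output drifts out of scope. This is a hedged sketch: `supervised_run`, `toy_agent`, and `in_scope` are hypothetical, and the drift check is a toy keyword test. A real supervisor might use a token budget, a diff-size limit, or a human checkpoint instead.

```python
from typing import Callable, List


def supervised_run(
    step: Callable[[int], str],
    on_track: Callable[[str], bool],
    max_steps: int = 5,
) -> List[str]:
    """Run an agent one step at a time under a hard step cap and a drift check."""
    outputs: List[str] = []
    for i in range(max_steps):
        result = step(i)
        if not on_track(result):
            # Stop as soon as output drifts out of scope, before it piles up.
            outputs.append(f"STOPPED at step {i}: drift detected")
            break
        outputs.append(result)
    return outputs  # never more than max_steps entries


# A toy agent that behaves for three steps, then tries to do far too much.
def toy_agent(i: int) -> str:
    return f"edit file_{i}.py" if i < 3 else "rewrite entire repo"


def in_scope(output: str) -> bool:
    return "entire repo" not in output


print(supervised_run(toy_agent, in_scope, max_steps=10))
# ['edit file_0.py', 'edit file_1.py', 'edit file_2.py', 'STOPPED at step 3: drift detected']
```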

That's why this three-layer view matters. It keeps the work understandable. It keeps compliance in view. It lets you see how everything fits so you can trust what the system is doing and catch the moments where it starts to drift.

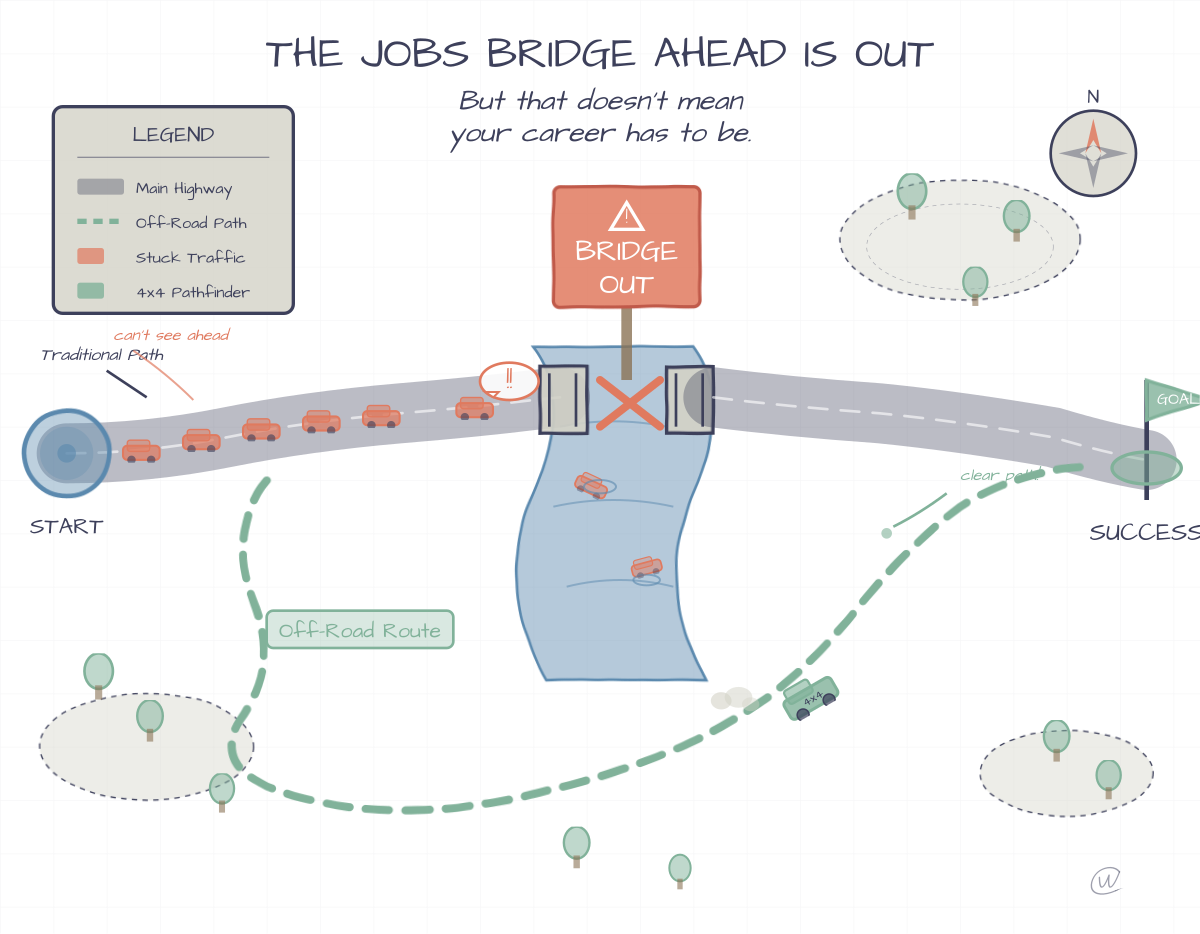

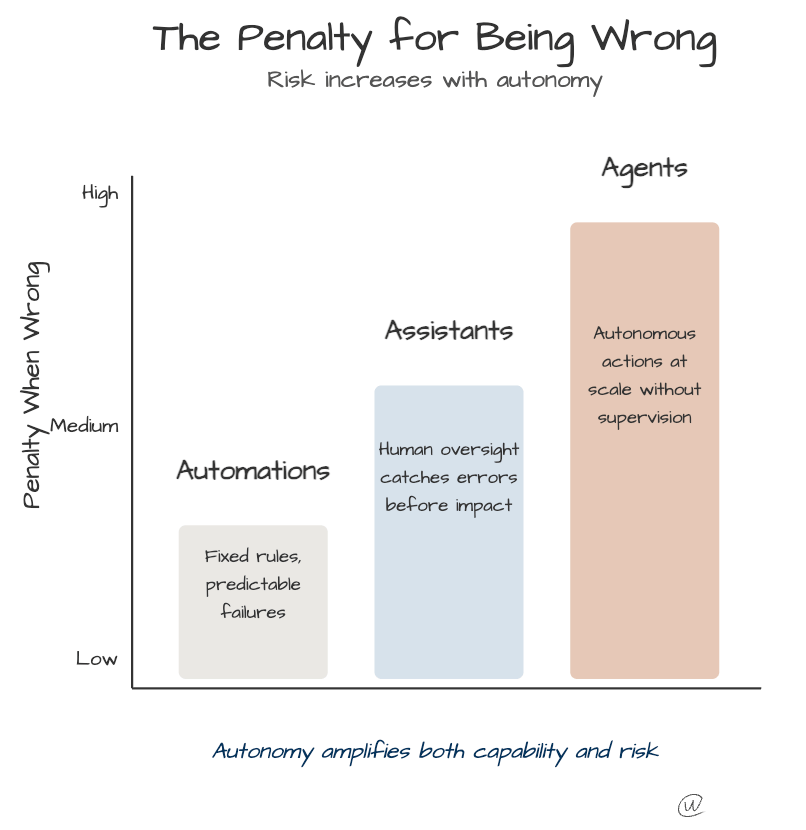

The Penalty for Being Wrong

When it comes to assistants, agents, and automations, the penalty for being wrong is one of the most important things to consider.

Teenager with Car Keys not shown (because they are up and to the right, way out of view).

For example, a competitor of ours used an agent to change the primary category for a business whose listings they managed, both on its Google Business Profile and on other distribution sites.

In doing so, they systematically ruined the company's digital visibility and triggered a re-verification (a major pain in the ass).

That kind of agentic mistake carries a massive penalty for being wrong.

We should all be identifying opportunities for agents (though in that case, I'd classify the tool as more of an assistant). But we have to be very careful about the penalty if the agent acts and is wrong, and about how we control and monitor for it. Right now the definitions are far too loosey-goosey, and that's causing a lot of problems.
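A simple way to control for the penalty of being wrong is to tag each proposed action with how costly it is to undo, let low-penalty actions run, and hold high-penalty ones for human sign-off, logging every decision. The sketch below assumes a two-level scale; `Penalty`, `ProposedAction`, `execute`, and `audit_log` are hypothetical names, and a real system would assign penalties per action type rather than by hand.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List


class Penalty(Enum):
    LOW = "low"    # cheap to undo, e.g. drafting a reply for review
    HIGH = "high"  # costly or slow to reverse, e.g. changing a primary business category


@dataclass
class ProposedAction:
    description: str
    penalty: Penalty


audit_log: List[str] = []


def execute(action: ProposedAction, approved_by_human: bool = False) -> str:
    """Run low-penalty actions automatically; hold high-penalty ones for sign-off."""
    if action.penalty is Penalty.HIGH and not approved_by_human:
        outcome = f"HELD for review: {action.description}"
    else:
        outcome = f"EXECUTED: {action.description}"
    audit_log.append(outcome)  # every decision is recorded so it can be monitored
    return outcome


change = ProposedAction("Change primary category on the business profile", Penalty.HIGH)
print(execute(change))                          # HELD for review: Change primary category ...
print(execute(change, approved_by_human=True))  # EXECUTED: Change primary category ...
```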

As I explored in Agency Beats Intelligence in AI Development, agency only adds value after crossing a minimum intelligence threshold.

Below that threshold, increasing agency becomes problematic because you get high-agency, low-intelligence systems making confident, wrong decisions at scale.

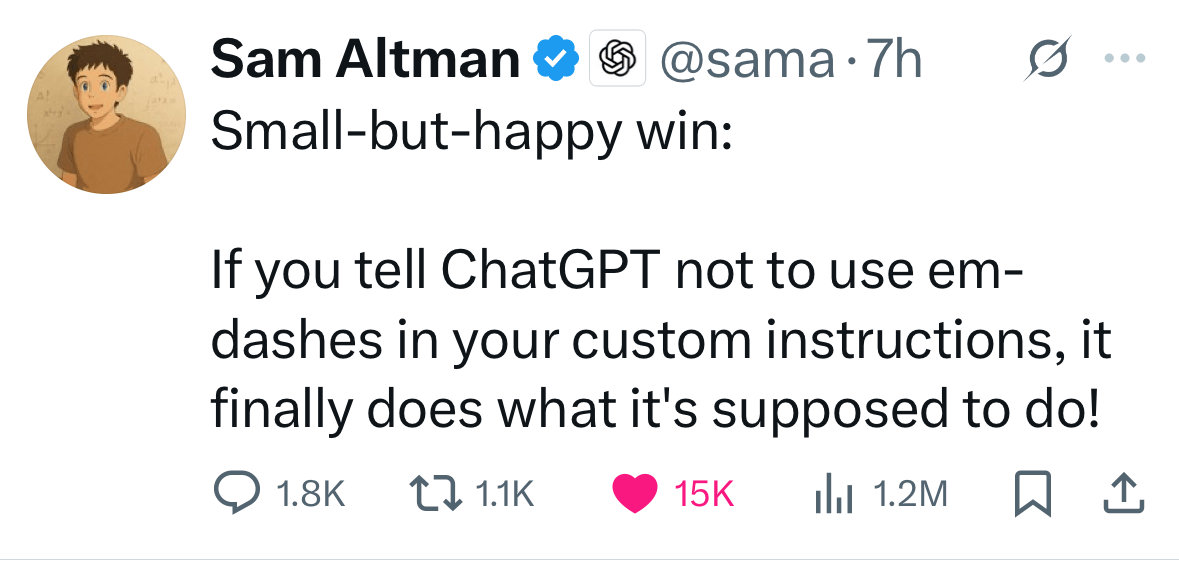

And you get too many em dashes…

I honestly miss the em dash.

Time Is the Only Real Asset

My old friend Rob Usey sent me the a16z article about why air conditioning is cheap but AC repair is expensive. (You should read the article… it’s excellent.)

The piece covers the interplay between Jevons’ Paradox and Baumol's Cost Disease.

Jevons’ Paradox observes that when efficiency improvements make something cheaper, we consume vastly more of it rather than using less.

Computing offers the textbook case. As transistor costs plummeted from dollars to fractions of a cent, demand surged across numerous new applications.

Baumol's Cost Disease describes how services that don't become more productive tend to become more expensive anyway.

William Baumol's original example focused on orchestras. They ran into financial trouble not because musicians became less productive, but because musicians' wages had to rise to match opportunities created elsewhere in the economy.

The article connects these through the example of AC repair. HVAC technicians now command $150 per hour for home repairs because they can earn that installing systems for data centers.

The work itself hasn't changed, but it must compete for skilled labor in the same market where AI has created productivity booms.

We constantly try to measure things economically by converting them into currency, but I believe time is the only real asset that can be measured and applied across economic theories.

Efficiency and productivity are both tied to time usage and time expansion. Every job that can't be sped up, such as patching a wall or fixing the AC, becomes proportionately more expensive, because the people who have benefited from the boom want to keep more of their own time. So they pay more to the professions that can't be made faster or more efficient.

This connects to agents versus automations because the economic value depends entirely on time expansion. An "agent" that burns $500 in processing to save you 30 minutes hasn't created economic value. An automation that runs a 15-minute workflow in 90 seconds while consuming $2 in compute represents genuine time expansion.
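The arithmetic behind that comparison is worth writing down. The sketch below uses the $500 / 30-minute and $2 / 15-minutes-to-90-seconds figures from the paragraph above; the $100/hour value of time is an assumption chosen only for illustration, and `time_roi` is a hypothetical helper.

```python
def time_roi(minutes_before: float, minutes_after: float,
             cost_usd: float, value_per_hour_usd: float) -> dict:
    """Compare the value of time saved against what the run cost."""
    minutes_saved = minutes_before - minutes_after
    hours_saved = minutes_saved / 60
    value_saved = hours_saved * value_per_hour_usd
    return {
        "minutes_saved": minutes_saved,
        "net_value_usd": round(value_saved - cost_usd, 2),
        # What you are effectively paying for each hour of time you get back.
        "cost_per_hour_saved_usd": round(cost_usd / hours_saved, 2) if hours_saved > 0 else float("inf"),
    }


HOURLY_VALUE = 100.0  # assumption: what an hour of the person's time is worth

agent = time_roi(30, 0, cost_usd=500, value_per_hour_usd=HOURLY_VALUE)
automation = time_roi(15, 1.5, cost_usd=2, value_per_hour_usd=HOURLY_VALUE)
print(agent)       # net_value_usd -450.0, cost_per_hour_saved_usd 1000.0
print(automation)  # net_value_usd 20.5, cost_per_hour_saved_usd 8.89
```

The "agent" effectively charges $1,000 for every hour it saves, so it only pays off if your time is worth more than that. The automation buys back time at under $9 an hour.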

Organizations chasing autonomy without measuring time ROI will discover they've built expensive systems that don't actually expand anyone's capacity to do more valuable work. The terminology matters less than the time equation.

What This Means for How We Build

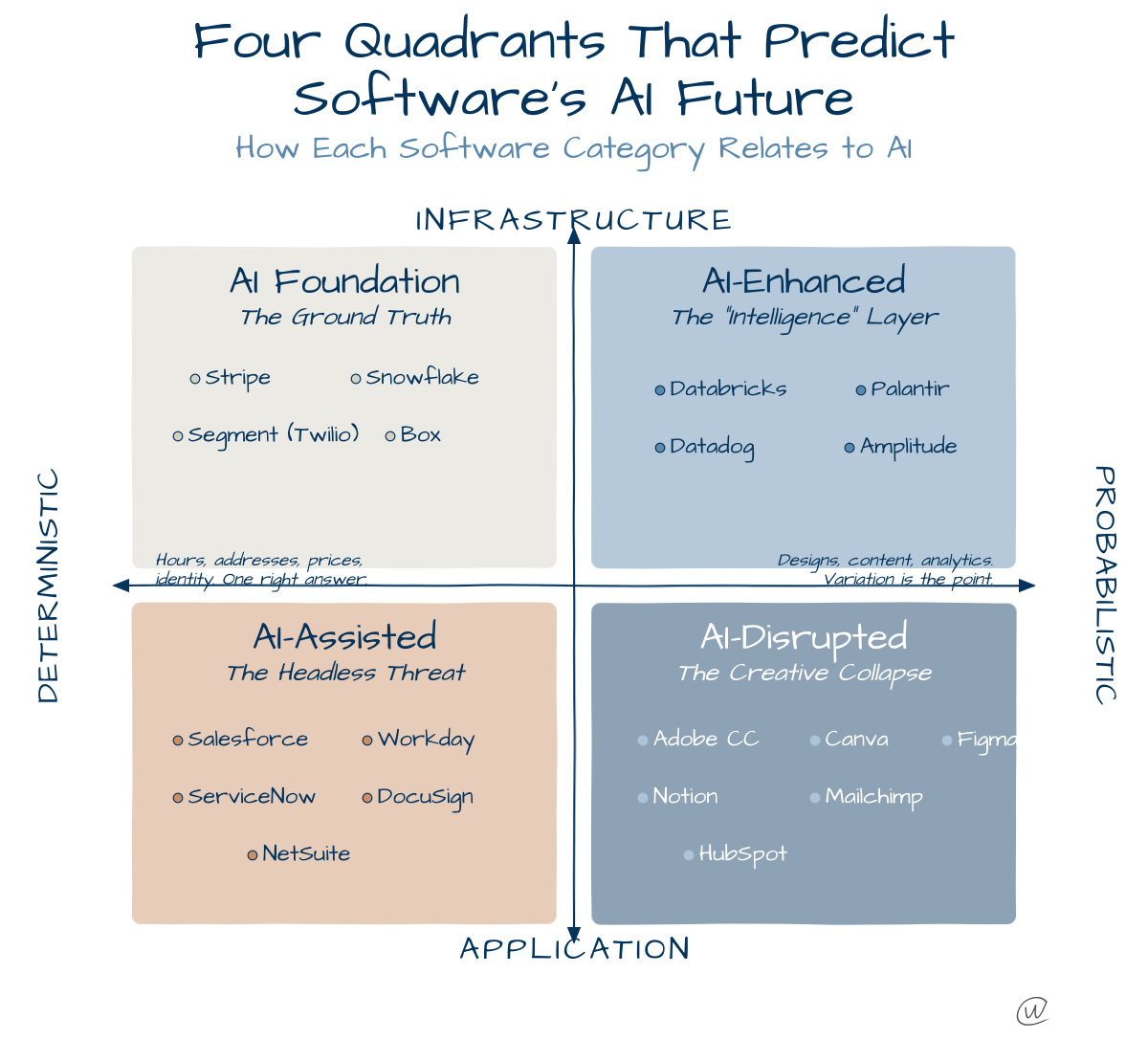

Much of what's being built right now mixes and matches capabilities, and vendors describe their tools inconsistently. I get it, but we'd all be better off thinking clearly about how AI differs across the agent, assistant, and automation framework.

HubSpot's AI work offers a useful example.

Nicholas Holland explained that "agents move from completing tasks to doing entire jobs, eventually combining jobs into larger bodies of work."

Their customer agents resolved issues 39% faster and prospecting agents booked 11,000 meetings in Q1 alone. But look closer at what these systems actually do. They execute predefined workflows triggered by specific conditions.

When a vendor demonstrates an "AI agent," ask specific questions about autonomy.

Does it set its own goals or follow predefined workflows? Can it adapt its approach based on changing conditions without human reprogramming, or does it execute fixed logic?
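Those two questions, plus whether the tool is something you actually converse with, can be turned into a rough rubric. This is a hedged sketch, not a formal standard: `classify` and its parameters are hypothetical, and the mapping is one simple reading of the three-layer table.

```python
def classify(sets_own_goals: bool, adapts_without_reprogramming: bool,
             conversational: bool) -> str:
    """Rough rubric mapping answers to the vendor questions onto the three layers."""
    if sets_own_goals and adapts_without_reprogramming:
        return "agent"       # decides how to pursue goals inside its lane
    if conversational:
        return "assistant"   # a conversation surface over predefined capabilities
    return "automation"      # predefined workflow executing fixed logic


# A hypothetical "agent" that fires a fixed sequence when a lead crosses a score threshold:
print(classify(sets_own_goals=False, adapts_without_reprogramming=False, conversational=False))
# automation
```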

Everything Is Moving in the Right Direction

Gemini 3 is likely to be released this week.

I expect it to blow the doors off most models.

That makes me feel everything is moving in the right direction.

Things are a little fast and reckless, but they're still progressing.

In 1968, elementary school teachers picketed against the use of calculators in grade schools, worried that students wouldn't learn math concepts if they could simply punch numbers into a device.

We're in a similar transition with AI. What we end up with is this weird hybrid of assistants, agents, and automations, and I think that's exactly where we should be.

We want an AI that is smart enough to be told what to do, to go do it reasonably, and to not make mistakes along the way. The reality is we're not there yet, and pretending we are by calling automations "agents" just creates misaligned expectations and budgets.

Being honest about what each tool actually does, and measuring how much time it expands, is the practical path forward regardless of what vendors call their tools.