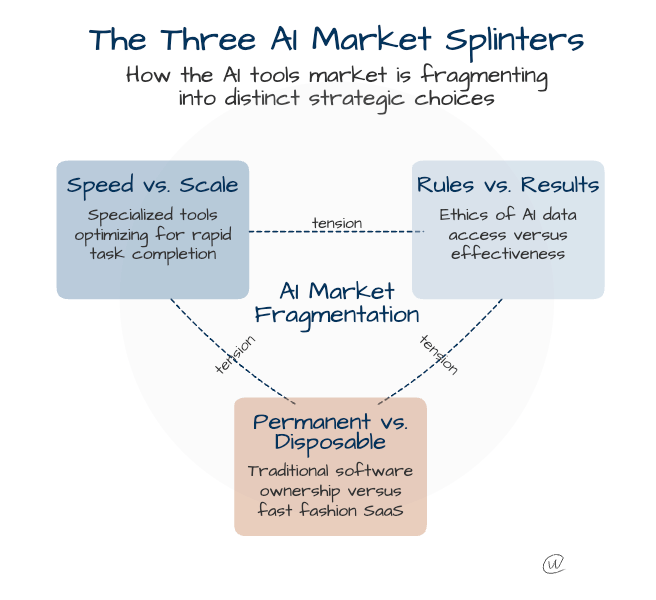

AI Market Shows 3 Critical Splinters Now

Why Speed vs. Scale, Rules vs. Results, and Permanent vs. Disposable choices will determine your AI strategy success

Christian J. Ward

Aug 7, 2025

Some major announcements and shifts are happening. OpenAI just launched gpt-oss, its open-weight model family, which it positions as comparable to o4-mini and o3-mini. Google launched a world model called Genie 3, and we are pretty sure GPT-5 is launching in a few hours.

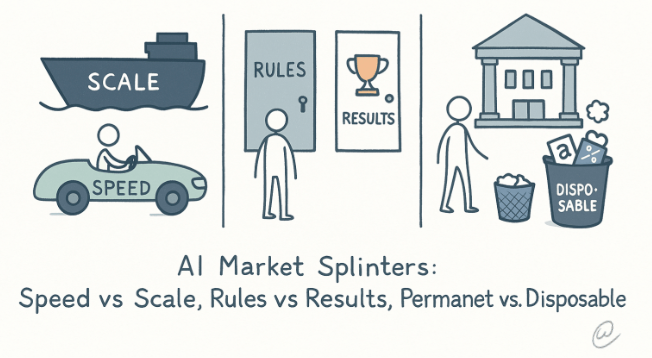

That said, I see three major “Splinters” forming.

Speed vs. Scale (specialized tools beating ChatGPT at specific tasks)

Rules vs. Results (the ethics of how AI accesses data)

Permanent vs. Disposable (the concept of throwaway software)

Each forces businesses to rethink at least portions of their AI strategy.

So many Posts/Discussions now fall along these Three Splinters.

Splinter 1: Speed vs. Scale

Peter Yang captured the first splinter perfectly when he tweeted about still preferring Perplexity AI for quick questions. His recent queries ranged from restaurant pricing trends to stock market movements to graphic novel recommendations. The key insight wasn't what he asked but why he chose Perplexity over ChatGPT: speed.

Speed is a major splinter.

This preference highlights an emerging pattern where users choose specific AI tools for particular tasks rather than defaulting to the market leader. ChatGPT owns the overall market with 700 million weekly active users, according to Nick Turley's numbers. That's 8.6% of the world's population using one AI tool every week.

I bet it’s already closer to 800M Users.

Perplexity finds its audience by optimizing for rapid information retrieval. Claude focuses on nuanced analysis. Grok 4's Imagine takes on visual creation. Each carves out territory by refusing to be mediocre at everything.

Splinter 2: Rules vs. Results

The second splinter involves how these tools operate behind the scenes. Cloudflare recently accused Perplexity of using undeclared crawlers to bypass website restrictions, essentially scraping content that publishers explicitly blocked from AI access.

Cat and Mouse… just like old times.

The reality is that if Cloudflare blocks Perplexity without letting users opt in, they're removing one of the better AI platforms from consumer access. With Perplexity's new Comet browser, blocking means cutting off a portion of the internet. If Cloudflare tries this with ChatGPT, businesses won't stand for it. They'll force Cloudflare to open their content as the default.

The web remains angry about how AI tools access data, but that anger won't stop progress. Companies need AI that works, not AI that follows every publisher's preference. This tension between ethics and effectiveness leads directly to the most disruptive splinter.

Splinter 3: Permanent vs. Disposable

The third splinter might prove most disruptive. Sam Altman cryptically tweeted about entering "the fast fashion era of SaaS very soon," suggesting AI tools might become as temporary and trend-driven as clothing lines.

Fast fashion is a horribly accurate term.

Fast fashion means rapid production, constant newness, planned obsolescence, and price accessibility. For AI software, expect tools launching weekly, features changing monthly, and entire platforms becoming obsolete quarterly. Your procurement process that takes six months? Dead on arrival.

Ethan Mollick's observation about "important people" predominantly using GPT-4o highlights an interesting paradox. Elite users stick with OpenAI while preaching flexibility to everyone else. They've found their "good enough" and stopped looking.

Use the Premium Models, People!

Scale (Company) vs. Speed (Personal) Usage is My Current Focus

Your AI Strategy Needs These 3 Adjustments

Build human buffer zones between AI outputs and business decisions. When I say buffer zones, I don't mean middleware or APIs. I mean humans who review AI outputs before they affect your business. Too many teams over-automate with tools like n8n, copying and pasting automations without checkpoints. Export every AI-generated output into a table for later analysis. Create human access points where someone validates results before implementation.
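One way to picture a buffer zone is as a gate that only passes approved outputs, plus a table export for later analysis. The sketch below is illustrative only: `Review`, `approved_outputs`, and `to_csv` are hypothetical names, and a real setup would pull the approval from whatever review step your team actually uses.

```python
import csv
import io
from dataclasses import dataclass
from typing import List


@dataclass
class Review:
    """One AI output plus the human decision on it (hypothetical record shape)."""
    task: str
    ai_output: str
    approved: bool
    reviewer: str


def approved_outputs(reviews: List[Review]) -> List[str]:
    """Return only the outputs a human has signed off on."""
    return [r.ai_output for r in reviews if r.approved]


def to_csv(reviews: List[Review]) -> str:
    """Export every review, approved or not, as a CSV table for later analysis."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["task", "ai_output", "approved", "reviewer"])
    for r in reviews:
        writer.writerow([r.task, r.ai_output, r.approved, r.reviewer])
    return buffer.getvalue()
```

Rejected outputs stay in the table on purpose, so the later analysis can show how often the AI needed a human to catch something.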

Review your AI stack every 30 to 90 days. This space moves too fast for annual reviews. Identify individuals within your organization who are eager to explore new tools, rather than being tied to existing solutions.

Create sandbox environments for tool testing. Build a folder with 10 sample customer emails, 5 product descriptions, and 3 financial reports. Run each tool through identical tasks. Compare results side-by-side. Define what improved output looks like before you test. Never experiment with corporate or personal data until you've validated the tool's value and security.
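A sandbox run like this can be as simple as a loop that feeds the same samples to every tool and collects the outputs in one row per sample. This is a minimal sketch under stated assumptions: `tool_a` and `tool_b` are hypothetical stand-ins for real AI tools (which would normally be API calls), and the sample text is made up rather than real customer data.

```python
from typing import Callable, Dict, List


def tool_a(text: str) -> str:
    """Hypothetical stand-in for one AI tool; a real one would call an API."""
    return text.upper()


def tool_b(text: str) -> str:
    """Hypothetical stand-in for a second AI tool."""
    return text[:10]


def run_sandbox(
    tools: Dict[str, Callable[[str], str]],
    samples: Dict[str, str],
) -> List[Dict[str, str]]:
    """Run every tool on every sample and return one row per sample."""
    rows = []
    for sample_id, text in samples.items():
        row = {"sample": sample_id}
        for name, tool in tools.items():
            row[name] = tool(text)  # identical input for each tool
        rows.append(row)
    return rows


# Synthetic sample, not real customer data.
samples = {"email_01": "Where is my refund?"}
rows = run_sandbox({"tool_a": tool_a, "tool_b": tool_b}, samples)
```

Each row then holds the outputs side-by-side, which makes it easier to score them against the definition of "improved" you wrote down before testing.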

But what does this constant experimentation cost your organization?

The Real Cost of AI Tool Sprawl

Let's talk money. AI development tools cost almost nothing to start, but can cost hundreds of thousands of dollars in refactoring time if you're rebuilding systems. Writing tools run $50 to $100 monthly per platform. The real cost isn't subscriptions but transition time.

When I switched from Claude Opus to ChatGPT for refactoring my own tools, it took eight hours over a weekend. That time investment paid off immediately. Tasks that took an hour now take 30 minutes. Most tool switches follow this pattern: minimal upfront cost, moderate time investment, significant productivity gains.
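The payback on a switch like that is simple arithmetic: divide the one-time switching hours by the hours saved per task. The helper below is a rough sketch, not a costing model. `break_even_tasks` is a hypothetical name, and it assumes every task saves the same amount of time.

```python
import math


def break_even_tasks(
    switch_hours: float,
    old_task_hours: float,
    new_task_hours: float,
) -> int:
    """Number of tasks needed before the time saved covers the switching time."""
    saved_per_task = old_task_hours - new_task_hours
    if saved_per_task <= 0:
        raise ValueError("the new tool must be faster per task to break even")
    return math.ceil(switch_hours / saved_per_task)


# Figures from the example above: 8 hours to switch, 1 hour -> 30 minutes per task.
tasks_needed = break_even_tasks(8, 1.0, 0.5)
```

With those figures, the eight hours are recovered after 16 such tasks, and everything beyond that is net gain.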

The "Good Enough" Framework determines when to switch. If your current tool handles 80% of use cases adequately, switching for marginal improvements wastes resources. But when you find gaps in core functionality or discover a tool that's 10x better at critical tasks, eight hours of switching beats months of inefficiency.
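The framework can be written down as a small decision rule. This is only a sketch of the rule as described here: `switch_decision` and its parameters are hypothetical names, and the "evaluate" branch is my assumption for the case the framework doesn't spell out (coverage below 80% with no core gap and no 10x alternative).

```python
def switch_decision(
    coverage: float,
    has_core_gap: bool,
    speedup_on_critical_tasks: float,
) -> str:
    """Return "switch", "stay", or "evaluate" for a candidate tool.

    coverage: share of use cases the current tool handles adequately (0.0 to 1.0).
    has_core_gap: True if the current tool fails at core functionality.
    speedup_on_critical_tasks: how many times better the candidate is on critical tasks.
    """
    if has_core_gap or speedup_on_critical_tasks >= 10:
        return "switch"
    if coverage >= 0.8:
        return "stay"  # marginal improvements are not worth the switching cost
    return "evaluate"  # assumption: not covered by the framework, so look closer
```

For example, `switch_decision(0.85, False, 1.5)` returns `"stay"`, while `switch_decision(0.85, True, 1.5)` returns `"switch"`.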

Smart organizations budget for experimentation. Allocate $500 to $1,000 monthly for tool trials. More importantly, allocate time. Give your early adopters four hours weekly to test new platforms. Their discoveries will guide enterprise-wide decisions.

Yet even with budget and time, some organizations shouldn't attempt this approach.

When Tool Proliferation Fails

Not every organization should chase the newest AI platform. Regulated industries can't switch tools monthly when each change requires a compliance review. Healthcare, finance, and government need stability over innovation.

Small companies lack bandwidth for constant evaluation. With five employees, nobody has time to test every new AI tool. Better to master one platform deeply than juggle ten superficially.

Security requirements create real barriers. Each tool means another vendor assessment, another data processing agreement, another potential breach vector. If your security team takes three months to approve new vendors, you can't participate in fast fashion AI.

The human element matters most. Teams need consistency to build expertise. If you switch tools faster than people can learn them, productivity plummets. Watch for signs of change exhaustion, such as declining adoption rates, increased errors, and requests to "just pick something and stick with it."

Understanding these limitations helps frame what winning looks like in this new landscape.

Understanding the New AI Market

These three splinters, Speed vs. Scale, Rules vs. Results, and Permanent vs. Disposable, create a unified reality. Constant tool switching is now the norm and is unlikely to slow down.

ChatGPT maintains mass market leadership through brand recognition while alternatives compete through narrow excellence. But market position matters less than your switching capability.

Success requires accepting tools as temporary. Build processes that outlive any platform. Document what works universally, not tool-specific tricks. Celebrate deprecation as proof you're moving fast enough.

The winners in this splintering market share specific traits. They maintain dedicated innovation teams who live in the tools, not just read about them. They measure actual outcomes, not feature lists. They build knowledge bases capturing what works across platforms.

Do not become wed to any software. You will likely throw it out within months.

Instead, focus on solutions and piece together whatever software gets you there. In the future, AI will build custom software on demand for each problem. For now, take a consultative approach to every challenge, define the outcome, and then find the tools.

The only sustainable advantage is to adapt faster than your competition.