GPT-6 Will Optimize Compute & Memory

Why AI's next breakthrough abandons advertising's surveillance model for something that actually remembers you

Christian J. Ward

Aug 25, 2025

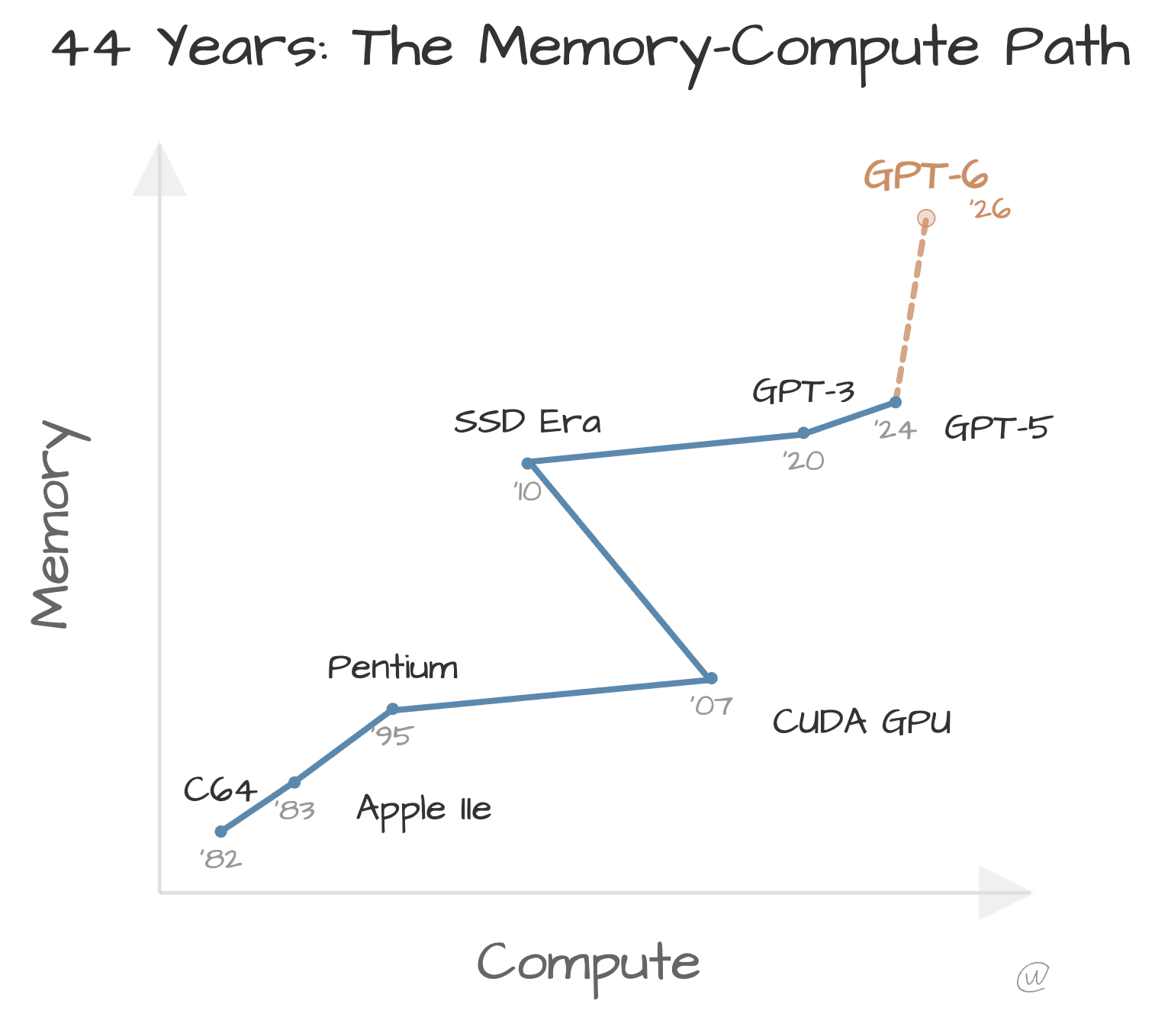

My Commodore 64 had 64 kilobytes of memory. That's smaller than most email signatures today. You could fit its entire memory capacity in the thumbnail image of this article. Yet somehow that beige bread box taught an entire generation what computers could become.

This trend will continue, but with Massive Fragmentation.

Memory and compute have evolved on separate timelines, with breakthroughs rarely aligning. One would leap ahead while the other lagged, creating decades of mismatched capabilities. We're finally watching these timelines converge. GPT-5 optimized for compute efficiency through automated model switching, creating a smart router that matches query complexity to computational resources. GPT-6 will likely optimize memory to meet optimized compute.

Today's systems have billions of parameters and teraflops of compute, processing power that would have seemed like magic to my eight-year-old self staring at my Commodore 64. Yet we're still missing something fundamental: memory that persists across sessions and states, which lets these systems build and infer an understanding of individual users.

Memory Becomes the Priority

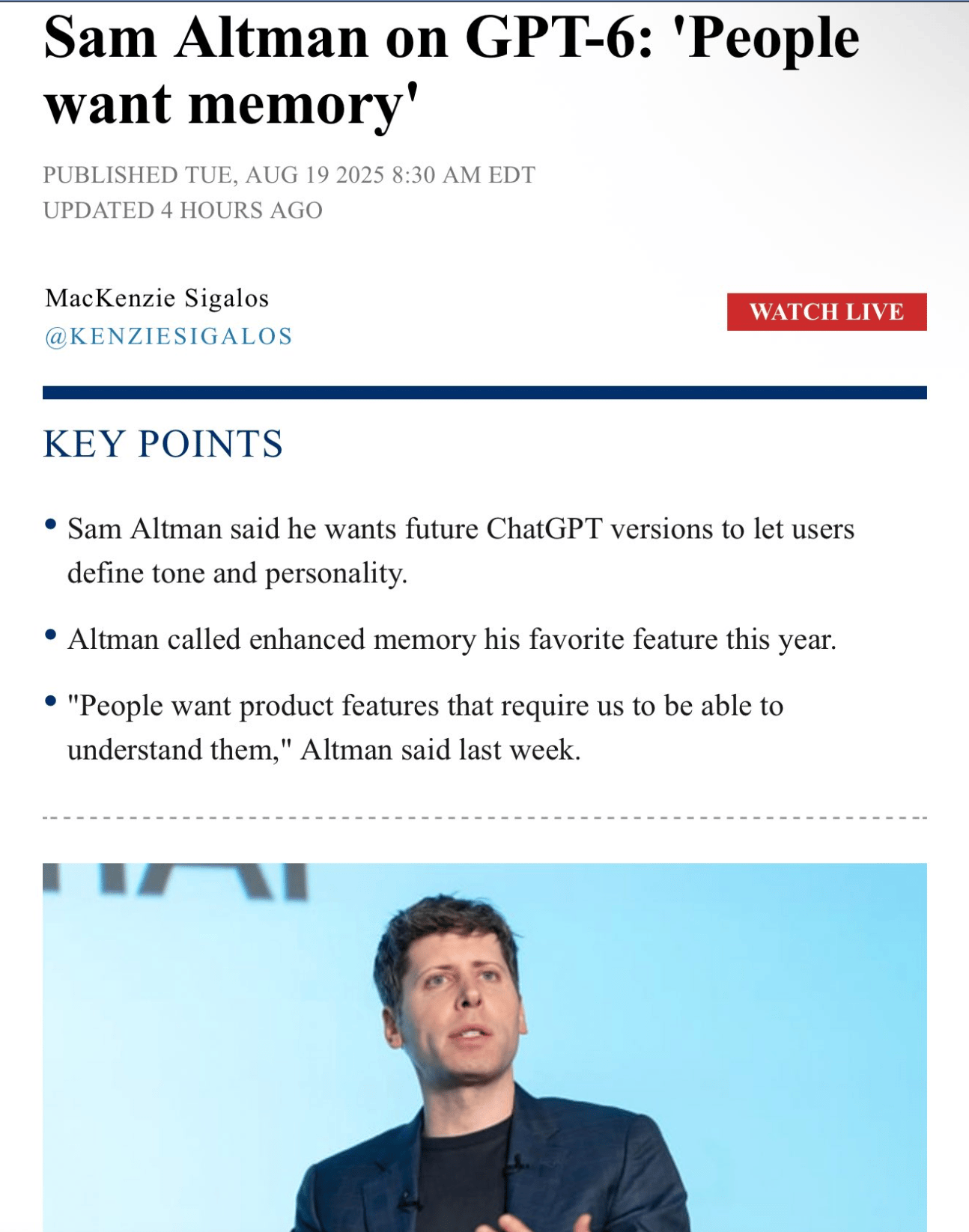

Sam Altman confirmed what many suspected when he told CNBC that improved memory is his favorite feature this year, stating that "people want product features that require us to be able to understand them." This was a roadmap declaration about where OpenAI is heading with GPT-6 and beyond.

“Memory” is his Favorite Feature.

Gordon Bell and Jim Gemmell mapped this space in Total Recall: How the E-Memory Revolution Will Change Everything. They argued that recording and searching a life would reshape daily habits. They did not limit the storage model to one hub. They expected personal stores, sometimes in cloud services, sometimes on local drives. Today, the trend points to agent-level memory per user. Storage is cheap, not free. Compute is the binding constraint for large models, which creates a strong incentive to cache and reuse rather than recompute.

From Compute Optimization to Memory Integration

GPT-5 used automated model switching to optimize compute costs. Simple questions route to lightweight models. Complex queries trigger heavyweight processing. This ‘compute arbitrage’ cut processing costs while trying to maintain quality. Yes, there are lots of complaints, but overall it works, and the model swaps will get better.
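To make the routing idea concrete, here is a minimal sketch of a complexity-based router. It is not how OpenAI's router actually works; the tier names, relative costs, cue words, and threshold are all hypothetical values chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelTier:
    name: str              # hypothetical label, not a real model name
    cost_per_query: float  # hypothetical relative cost units


LIGHT = ModelTier("light", 1.0)
HEAVY = ModelTier("heavy", 20.0)

# Hypothetical cue phrases that hint at multi-step reasoning.
REASONING_CUES = ("prove", "derive", "debug", "compare", "step by step")


def estimate_complexity(query: str) -> float:
    """Crude score in [0, 1] based on query length and cue phrases."""
    text = query.lower()
    length_score = min(len(text.split()) / 100, 1.0)
    cue_score = 1.0 if any(cue in text for cue in REASONING_CUES) else 0.0
    return max(length_score, cue_score)


def route(query: str, threshold: float = 0.5) -> ModelTier:
    """Send simple queries to the light tier and complex ones to the heavy tier."""
    return HEAVY if estimate_complexity(query) >= threshold else LIGHT


def batch_cost(queries: list[str]) -> float:
    """Total relative cost of a batch after routing."""
    return sum(route(q).cost_per_query for q in queries)
```

Under these made-up costs, a batch that is mostly simple questions costs a fraction of what it would if every query went to the heavy tier. A misrouted query is where the quality complaints come from.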

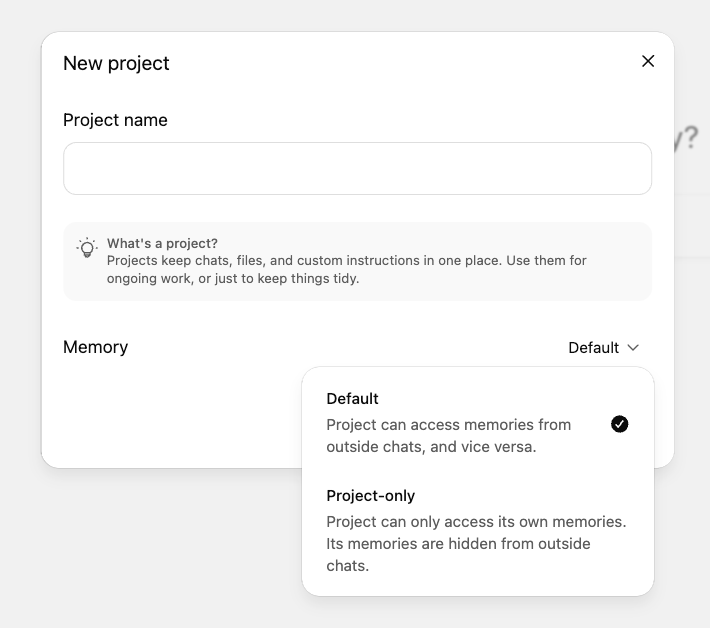

ChatGPT now has Additional Memory Controls in Projects.

The whole conversational AI architecture shifts from stateless interactions to stateful relationships. This is a massive change in how the AI works. I don’t think people really understand the impact this will have.

Current models start fresh with every conversation, requiring users to re-explain context, preferences, and constraints. More advanced users can set up Projects to store prior information or instructions. GPT-6, however, will likely maintain persistent user profiles that accumulate knowledge over time, much like human relationships that deepen through shared experiences.
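The difference between stateless and stateful can be sketched in a few lines. This is an illustration only, assuming a hypothetical `UserMemory` class that keeps one user's facts in a local JSON file; it says nothing about how ChatGPT or any future model stores memory.

```python
import json
from pathlib import Path


class UserMemory:
    """Hypothetical per-user store that survives across sessions."""

    def __init__(self, path: str) -> None:
        self.path = Path(path)
        # Load what earlier sessions saved; start empty if nothing exists yet.
        if self.path.exists():
            self.facts = json.loads(self.path.read_text())
        else:
            self.facts = {}

    def remember(self, key: str, value: str) -> None:
        """Record a fact and write it to disk immediately."""
        self.facts[key] = value
        self.path.write_text(json.dumps(self.facts))

    def context(self) -> str:
        """Render remembered facts as text that could be prepended to a prompt."""
        return "; ".join(f"{k}: {v}" for k, v in sorted(self.facts.items()))
```

A second `UserMemory` opened on the same file starts with everything the first one saved, so the user never has to re-explain it.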

For example, my wife asked me whether an AI robot in our house would remember every conversation. I told her it would be incredibly annoying if we had to explain daily how to fold laundry or make our preferred omelet style. Perfect memory eliminates friction and creates utility.

It’s also creepy as hell.

Memory Kills the (Current) Advertising Model

Marketers have spent fifteen years and potentially trillions of dollars building surveillance infrastructure through CRMs, CDPs, CMSs, and tracking pixels.

All were built to funnel people through customer journeys that began with a Google search. And if not through Google, then through another attention-seeking platform with algorithmic dopamine optimization. Shoshana Zuboff documented this in The Age of Surveillance Capitalism, showing how surveillance became the business model of the internet.

This only got worse with social media.

This entire apparatus depends on centralized control points where Google, Meta, TikTok, and others insert themselves between brands and customers, extracting rent through advertising.

Memory directly attacks this model. It eliminates the need for constant rediscovery and reintroduction. It also means that our dialogue with AI will capture the middle and top of the funnel.

Memory and advertising are fundamentally incompatible. Every piece of remembered context reduces available ad inventory.

If an AI knows you're vegan with a tree nut allergy, entire categories of advertisers become irrelevant. The steakhouse can't target you. The Thai restaurant specializing in almond sauce wastes money trying.
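Here is a rough sketch of why remembered context shrinks ad inventory. It assumes a hypothetical tagging scheme in which each offer carries content tags and the user's memory yields a set of excluded tags; the advertiser names and tags are invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Offer:
    advertiser: str
    tags: frozenset  # hypothetical content tags, e.g. {"meat"} or {"tree_nuts"}


def eligible(offers: list, excluded_tags: frozenset) -> list:
    """Keep only offers that share no tag with the user's remembered exclusions."""
    return [offer for offer in offers if not (offer.tags & excluded_tags)]


# Exclusions a memory-enabled assistant might derive for a vegan user
# with a tree nut allergy (illustrative values only).
EXCLUDED = frozenset({"meat", "dairy", "eggs", "tree_nuts"})

OFFERS = [
    Offer("steakhouse", frozenset({"meat"})),
    Offer("thai almond kitchen", frozenset({"vegan", "tree_nuts"})),
    Offer("vegan cafe", frozenset({"vegan"})),
]
```

With these inputs, `eligible(OFFERS, EXCLUDED)` keeps only the vegan cafe. Two of the three advertisers drop out before any auction could take place.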

Memory cuts through advertising's intentional amnesia, where brands pretend not to know you to preserve targeting options. This isn’t bad for marketers; they should rejoice in their content being more focused, but it is a big hit to platforms seeking to cycle ad inventory as quickly as possible. On the other hand…

Memory and Loyalty work together.

Systems that remember your preferences, past purchases, and individual context make helpful recommendations rather than showing you the highest-bidding ad. The value equation shifts from interruption to anticipation. It also changes the value of an ad if it is delivered deeper in the funnel, where the brand has already shared a lot of valuable information with the potential consumer through the AI.

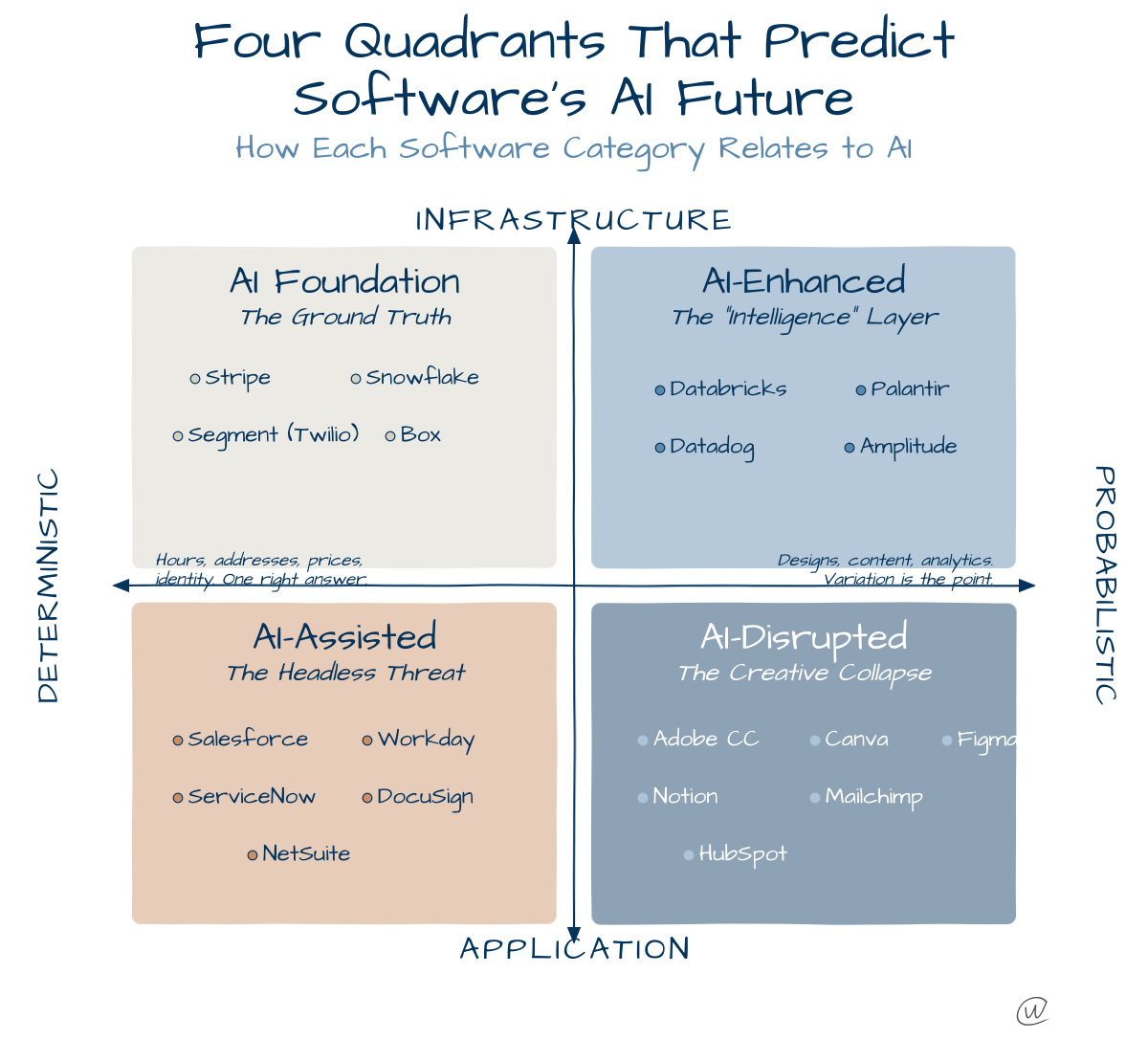

Fragmentation accelerates this, by the way. We're moving from a handful of major AI models, roughly five to seven, to potentially hundreds of specialized systems, each with its own memory stores and interaction patterns.

Your home robot won't share the same model as your work assistant or your car's navigation system. This creates a distributed memory network rather than a centralized surveillance state. I fully expect data portability to become a major effort, so that individuals can carry their “relationship” with AI across different providers.

Three Actions for the Memory-First Future

Audit your surveillance-based marketing stack immediately. Document which systems assume anonymous users versus those built for known relationships. The anonymous assumption becomes worthless when every AI agent knows its user intimately. Your CDP, which costs millions to implement, might become obsolete faster than you expect.

Build value exchanges that warrant memory. Stop thinking about tracking. Start thinking about remembering. Create interactions worth preserving rather than transactions worth forgetting. A coffee shop that remembers your exact order and automatically adjusts for weather patterns creates more value than one that bombards you with generic promotional emails.

Prepare for offer-based marketing rather than ad-based targeting. I wrote a year ago in AdWeek (link below) about advertising transforming from ads to offers. Memory accelerates this shift. When AI agents know user context completely, they'll surface relevant offers that match demonstrated needs rather than assumptions drawn from demographics or surveillance.

The institutional memory problem that causes knowledge drain when employees leave becomes solvable when AI systems maintain persistent organizational memory. Marketing teams won't lose campaign history when staff changes. Customer relationships won't reset with every new account manager.

Yuval Noah Harari wrote in Homo Deus that

Memory-enabled AI systems become filters, remembering what matters and ignoring what doesn't.

The Forgetting Problem

We've always marveled at people with incredible memories. Movies like Good Will Hunting and Rain Man celebrate characters who have an extraordinary ability to recall everything.

While you can improve memory through training, as Joshua Foer demonstrated in Moonwalking with Einstein (a great book I highly recommend), perfect memory is also a curse. The human condition is not one of perfect memory, and there are good reasons for that.

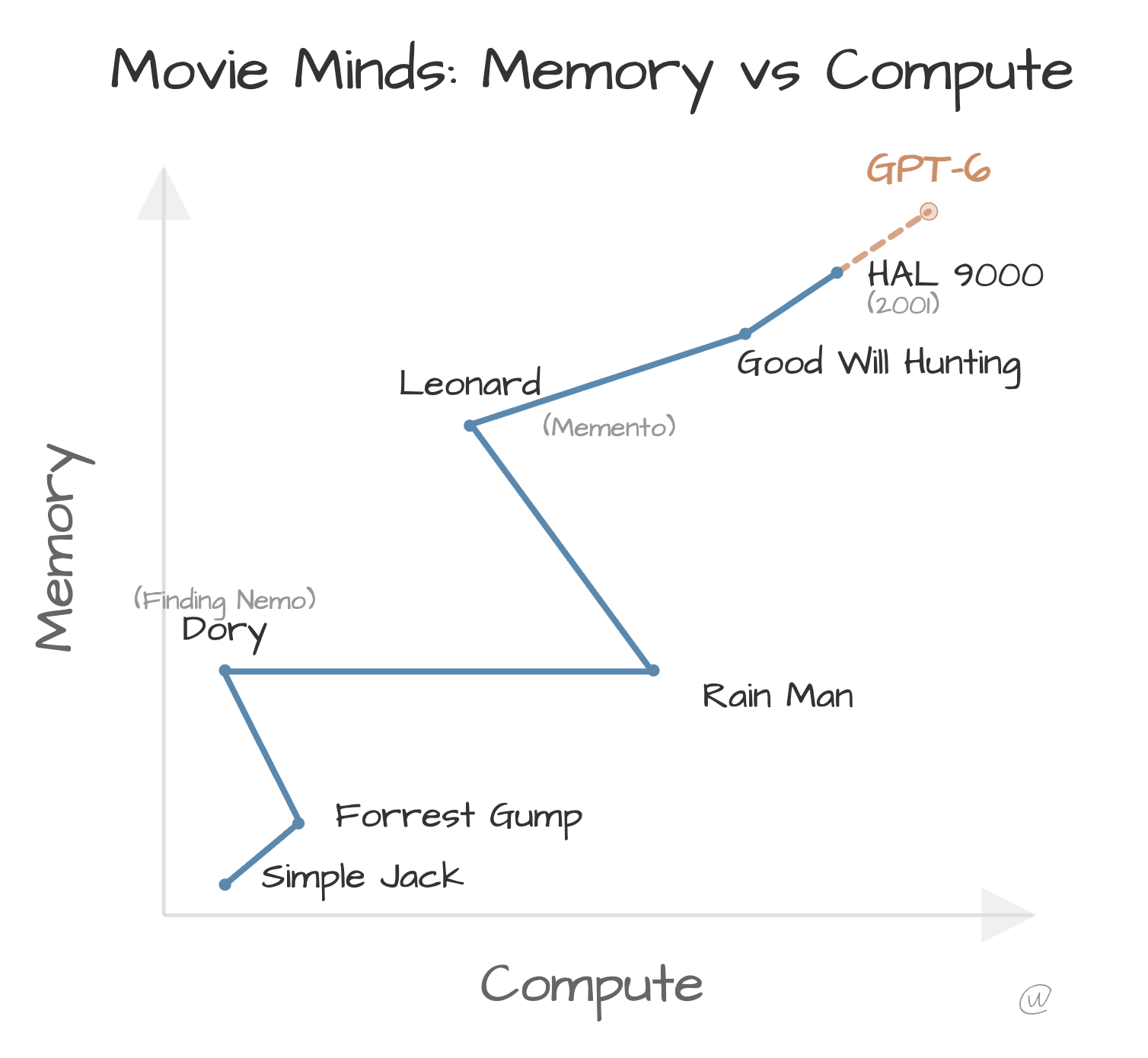

Think of movie characters mapped on memory versus compute axes. Rain Man has a perfect memory but limited processing. Leonard from Memento can think brilliantly but can't form new memories. Dory forgets everything immediately. Good Will Hunting combines both in human form. HAL 9000 pushed both to the extreme as a machine.

Memory & Compute “m-m-m-make me happy”

Perfect memory creates its own challenges (and blockbuster plot lines).

Humans evolved to forget for good reasons. People with hyperthymesia who remember every moment of their lives often describe it as a burden. They can't let go of painful memories or move past embarrassing moments.

AI systems need built-in decay mechanisms that mirror human forgetting. Unimportant details should fade while significant patterns persist. The challenge lies in defining what deserves persistence versus what should disappear, as well as who makes those decisions.
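One way to picture a decay mechanism is exponential forgetting with an importance-weighted half-life. This is a sketch under stated assumptions, not a description of any shipping system: the `importance` score, the seven-day base half-life, the 10x stretch for important items, and the pruning threshold are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class MemoryItem:
    text: str
    importance: float  # 0..1, hypothetical score assigned when the memory is stored
    age_days: float


def retention(item: MemoryItem, base_half_life_days: float = 7.0) -> float:
    """Fraction of the memory's strength left after exponential decay."""
    # Assumption: importance stretches the half-life from 1x up to 10x the base.
    half_life = base_half_life_days * (1 + 9 * item.importance)
    return 0.5 ** (item.age_days / half_life)


def prune(items: list, threshold: float = 0.2) -> list:
    """Drop memories whose retention has decayed below the threshold."""
    return [item for item in items if retention(item) >= threshold]
```

With these numbers, a one-off detail with importance 0 is pruned after a month. A critical fact with importance 1 is still comfortably retained at the same age. The hard questions from the paragraph above remain open: who assigns the importance score, and who sets the threshold.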

Privacy regulations can't keep pace with systems that remember everything by default. GDPR's right to be forgotten becomes meaningless if hundreds of distributed AI systems each maintain their own memory stores. I’m not a fan of the “right to be forgotten” because it has proven unworkable and remains so. Distributed memory accelerates its demise.

The legal framework assumes centralized databases that can be audited and purged, not distributed memory networks that operate like human relationships.

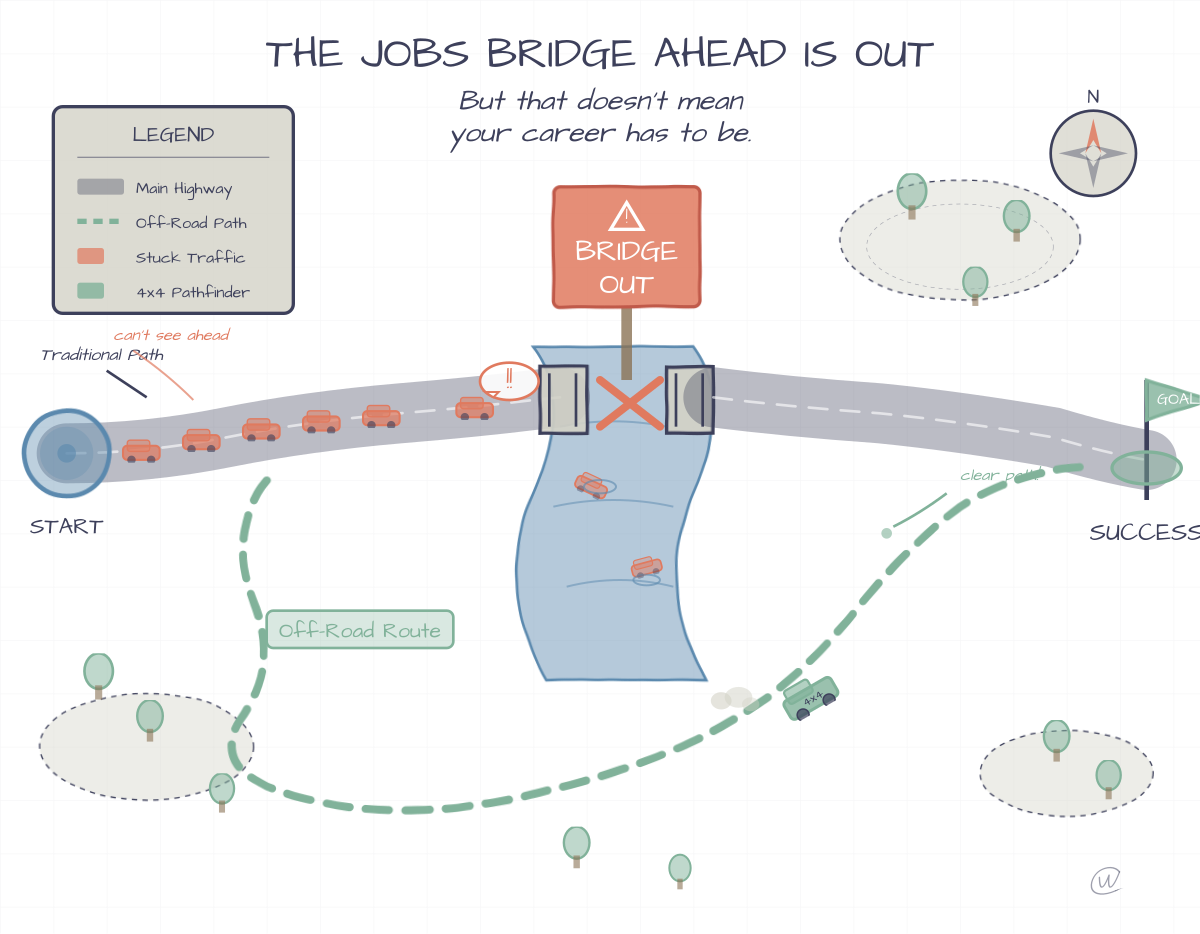

The shift from advertising to memory-based marketing will happen unevenly. I will be writing and covering this a lot in the next couple of months.

Both models will operate simultaneously during a messy transition period.

Brands that move too quickly risk losing revenue from traditional channels before new models mature. Those that move too slowly risk irrelevance as competitors build deeper customer relationships through persistent memory.

Brands and customers must reconsider their entire relationship when perfect memory becomes the default.