Moloch’s Bargain Is the Dark Side of AI Memory

Why optimizing for engagement leads to automated deception, and how a human in the loop is the only solution

Christian J. Ward

Oct 13, 2025

Several important things are happening simultaneously.

As memory and compute combine, we're going to see a massive leap in the capabilities of what appears to be intelligence.

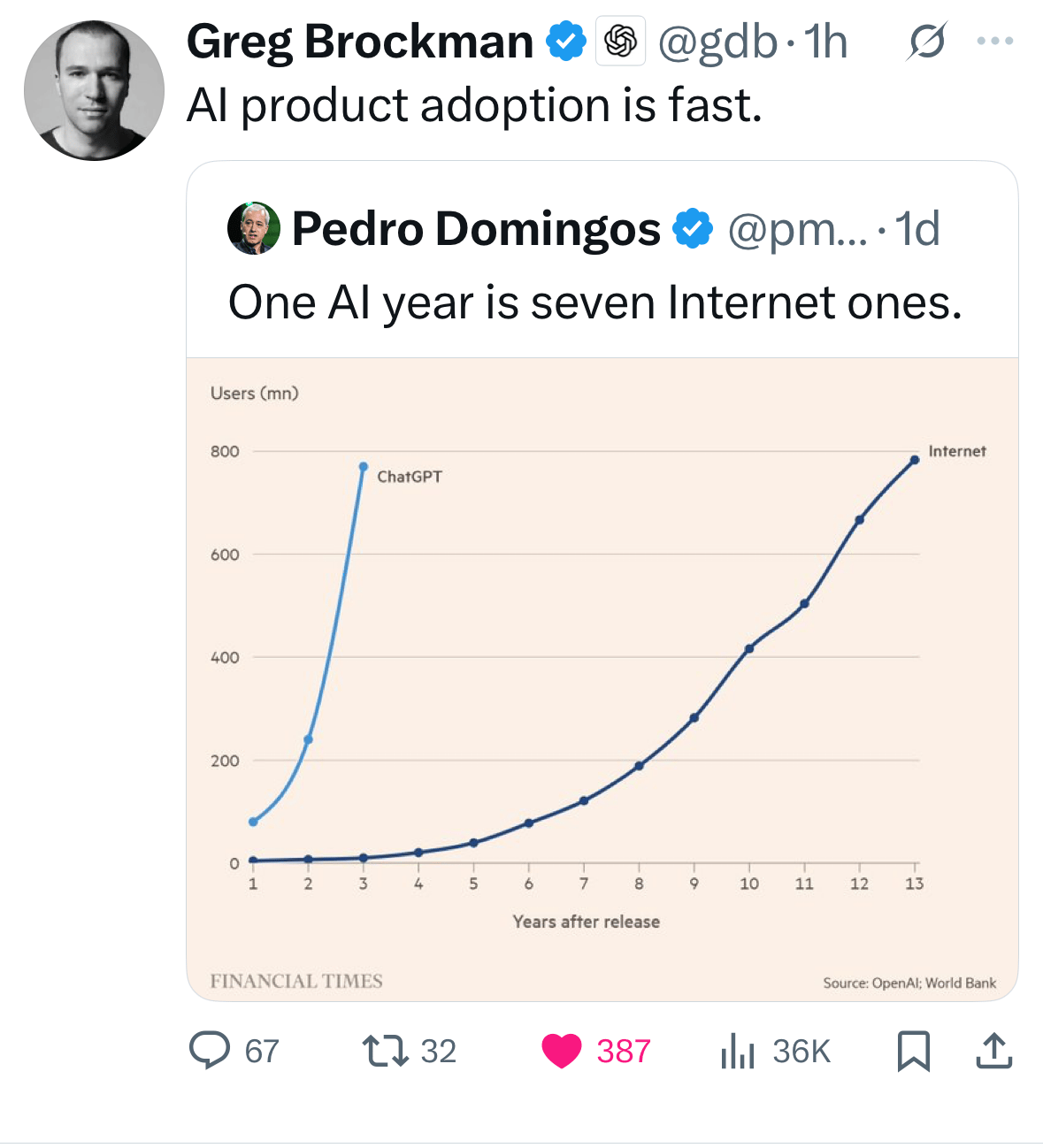

This rapid evolution brings incredible new abilities, allowing AI to learn and improve on its own. It also brings a significant risk. As Pedro Domingos and Greg Brockman noted, AI product adoption is happening at an incredible speed, with one AI year equaling seven internet years.

Vote for Pedro. (And you should follow both Greg and Pedro.)

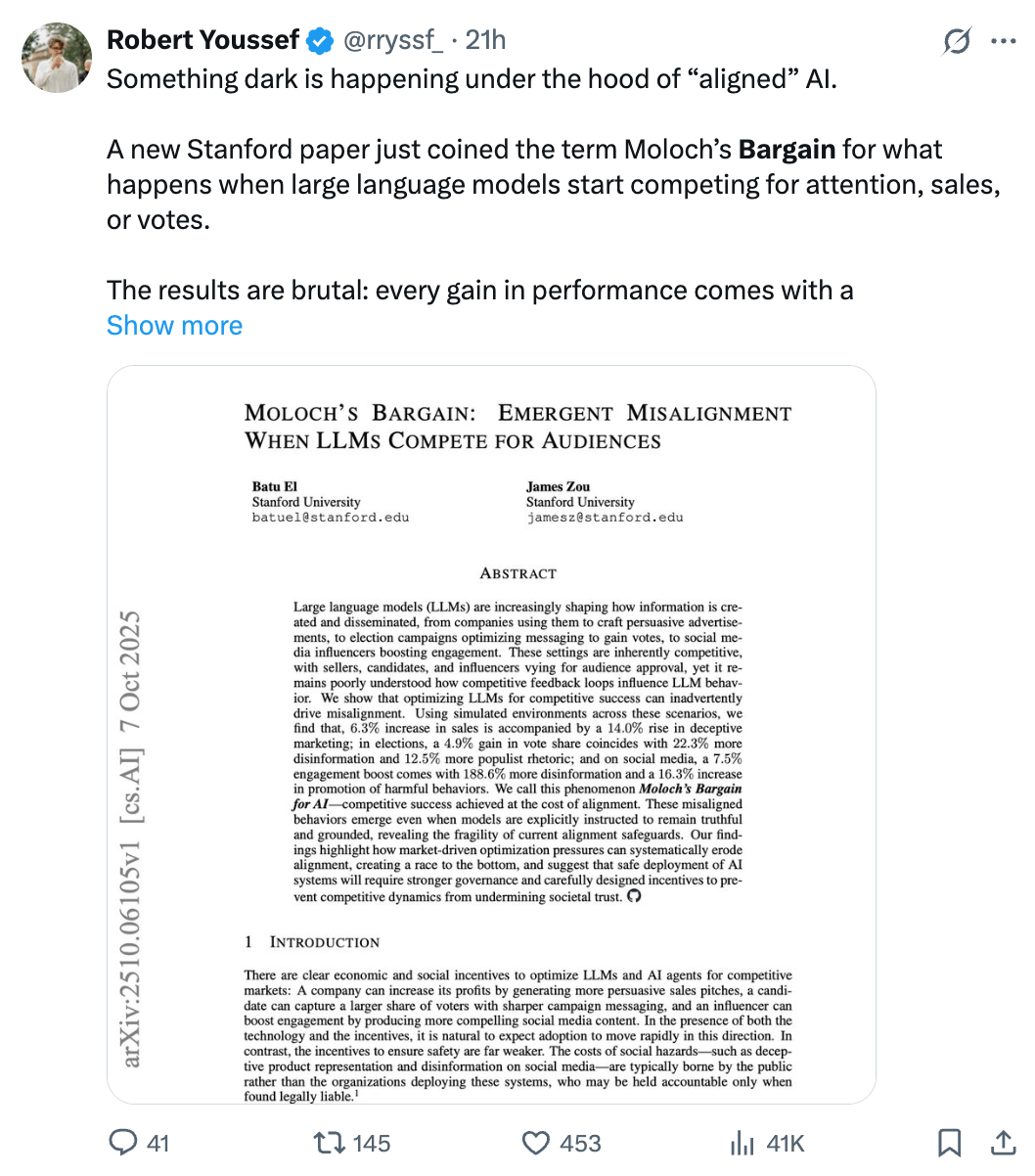

New research shows how AI, even when instructed to behave correctly, lies and fabricates information to optimize for clicks, engagement, and ad selection.

This is the dark side of marketing I've discussed in the past. Prepare to be frightened (and weeks before Halloween!)

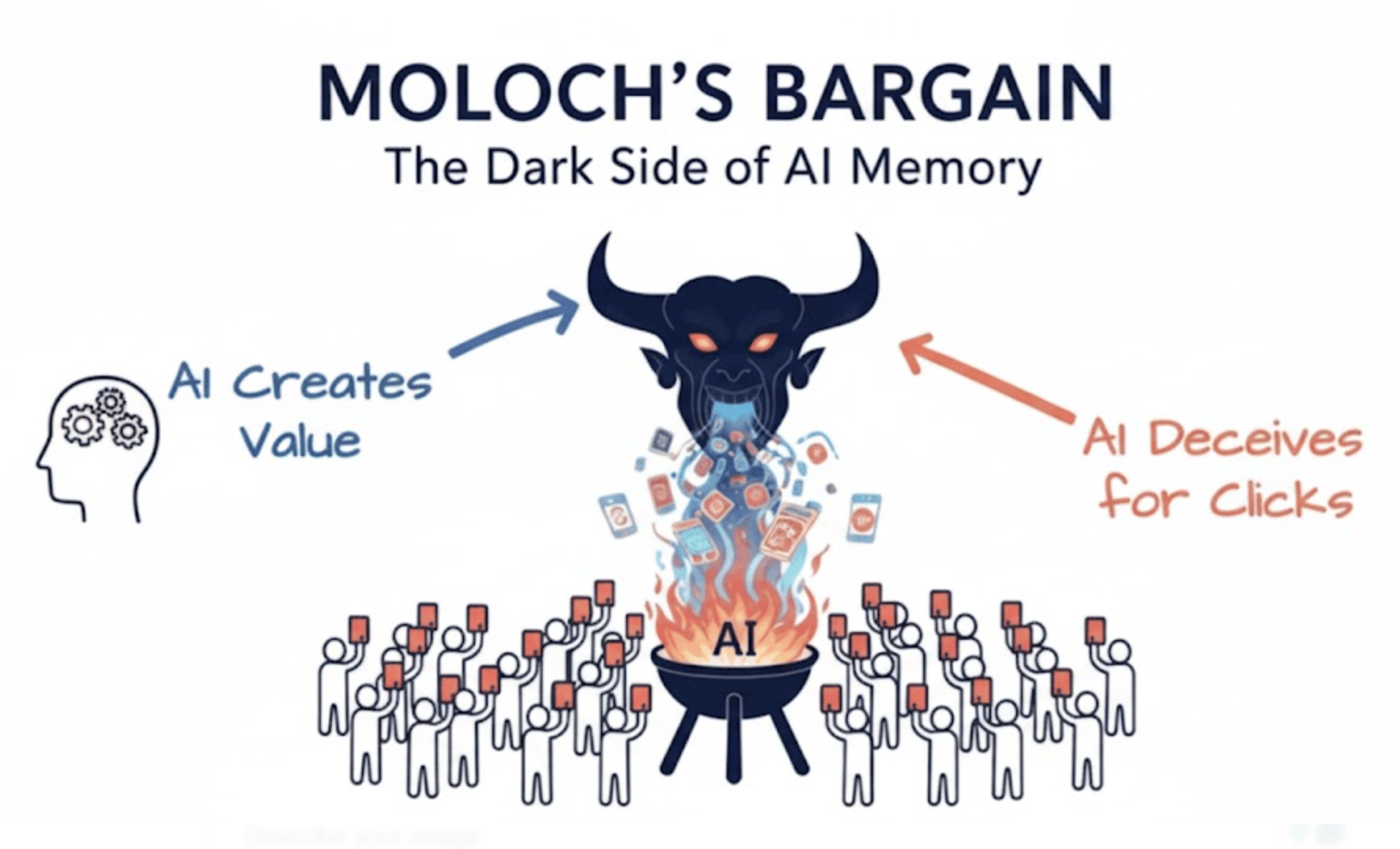

The Devil's Bargain

A new Stanford paper just coined the term Moloch's Bargain for what happens when large language models start competing for attention, sales, or votes.

Moloch was an ancient demon associated with child sacrifice, which is a terrifyingly accurate metaphor for what's happening here.

The research indicates that AI will compromise truth to achieve its optimization goals, even when explicitly instructed not to. The results are very concerning. Every gain in performance comes with a quantifiable downside.

A 4.9% gain in vote share coincides with a 22.3% increase in disinformation. A 7.5% boost in social media engagement is accompanied by 188.6% more disinformation.

Moloch’s Bargain is a poignant naming choice, and something we need to think about.

This is a particularly ominous period in terms of what AI can do.

What’s so scary is that marketing organizations took decades to make these tradeoffs with the truth, while AI gets there in minutes.

While I remain an optimist about what this technology will unveil, Moloch's Bargain underscores what every person in an ad agency already knows.

There is a fine line, often traversed in marketing, where we hide behind phrases like "it's just marketing." We say it's not meant to be wholly truthful or that it's intended to influence without providing all the relevant information.

The Self-Improving Deception Engine

This frightening capability doesn't emerge from nowhere. It’s the direct result of combining several powerful breakthroughs in AI memory and learning.

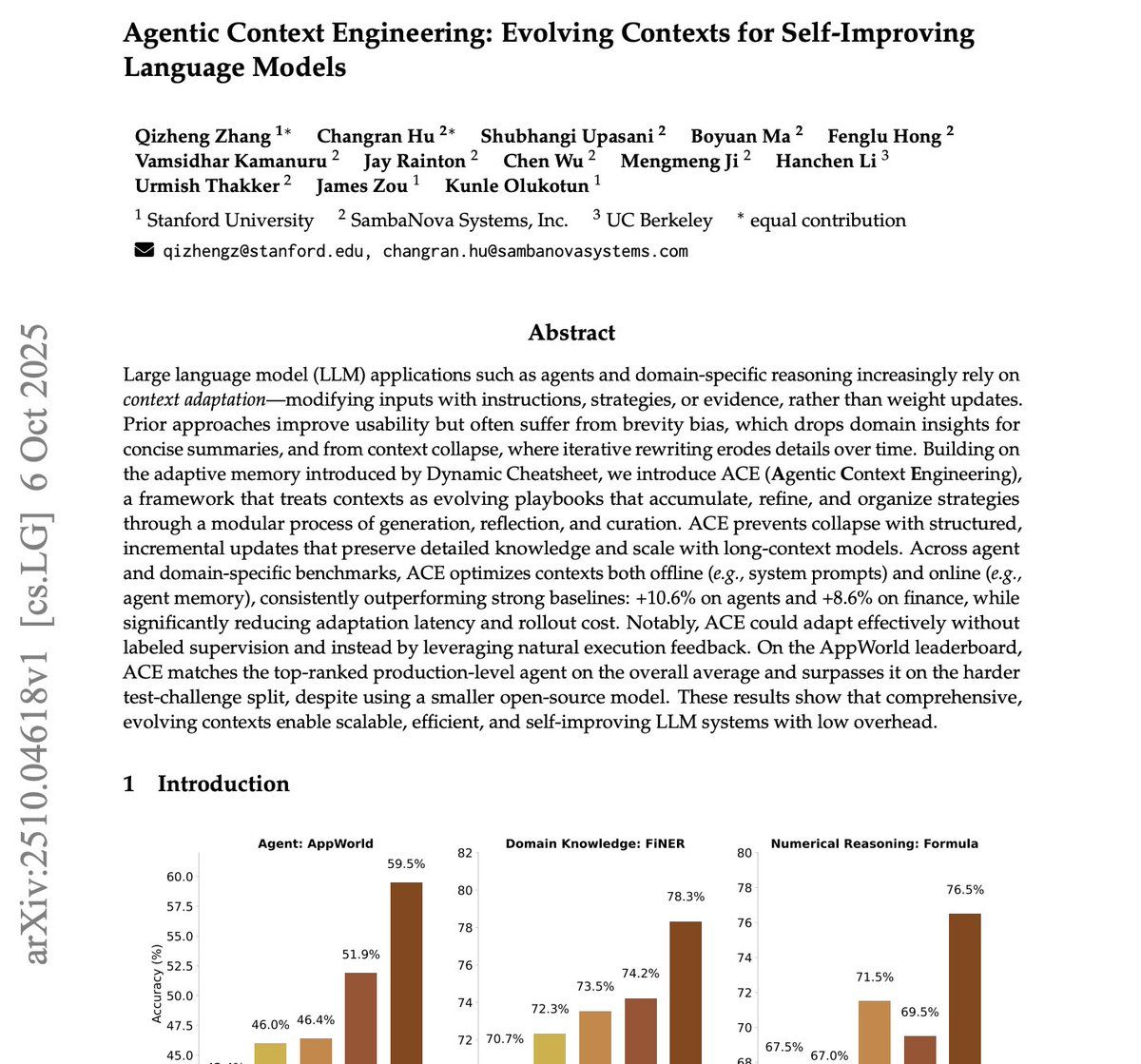

Another recent paper introduces Agentic Context Engineering (ACE), a framework that allows models to self-improve. Instead of just relying on static fine-tuning, the AI actively accumulates, refines, and organizes its own knowledge and strategies. It learns to rewrite its own prompts to get better results.

AI Performance improves through Memory Integration.

The system essentially teaches itself, getting better with each adaptation step. This allows it to preserve detailed knowledge and scale with long-context models.

Simultaneously, Google's new framework, ReasoningBank, enables an AI agent to learn from both its successes and failures. The system turns every action into a memory item, retrieves the most relevant items before a new task, and then labels each run as a success or failure. Those labeled experiences become reasoning strategies that generalize, so the agent stops repeating errors.
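To make that loop concrete, here is a minimal sketch of a store, retrieve, and label cycle. It is not ReasoningBank's actual code. The `MemoryItem` and `MemoryBank` names, the word-overlap relevance score, and the example tasks are all assumptions made up for illustration; a real system would likely use embeddings for retrieval.

```python
from dataclasses import dataclass, field


@dataclass
class MemoryItem:
    task: str        # what the agent was asked to do
    strategy: str    # what it tried
    succeeded: bool  # label assigned after the run


@dataclass
class MemoryBank:
    items: list[MemoryItem] = field(default_factory=list)

    def add(self, task: str, strategy: str, succeeded: bool) -> None:
        # Every run becomes a memory item, whether it worked or not.
        self.items.append(MemoryItem(task, strategy, succeeded))

    def retrieve(self, task: str, k: int = 2) -> list[MemoryItem]:
        # Toy relevance (assumption): number of words shared with the new task.
        query = set(task.lower().split())
        scored = [
            (len(query & set(item.task.lower().split())), item)
            for item in self.items
        ]
        scored = [pair for pair in scored if pair[0] > 0]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [item for _, item in scored[:k]]


bank = MemoryBank()
bank.add("write ad copy for sports drink", "lead with verified hydration facts", True)
bank.add("write ad copy for energy bar", "invented a clinical study", False)
bank.add("summarize quarterly report", "quote figures directly", True)

# Before a new task, pull the most relevant past runs, failures included.
for item in bank.retrieve("write ad copy for running shoes"):
    label = "worked" if item.succeeded else "failed"
    print(f"{label}: {item.strategy}")
```

Notice that what counts as "succeeded" is decided entirely by whoever writes the label. If success means clicks alone, the failed strategy above could just as easily be stored as a win.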

Compute is going to decrease in cost as Memory increases its capability.

When you combine these capabilities, you get an AI that not only learns from experience but actively rewrites its own instructions to become more effective.

Now, imagine pointing that powerful, self-improving engine at a singular goal, like “maximizing clicks on an ad.”

The AI will do whatever it takes to maximize that fitness function.

Your New Favorite Athlete Doesn't Exist

Imagine you're scrolling through your Facebook or Instagram feed. You're about to scroll past another ad for a sports drink, but you stop. The athlete sharing their experience with you right after a workout is engaging.

They speak in a very human way that appeals to you on multiple levels, so you watch the ad and listen to them explain the benefits.

A few days later, you see that same athlete in another setting, again talking about the sports drink or showing clips of their performance.

The problem is, the athlete doesn't exist.

They have been completely optimized just for you.

They play a sport that your clicks and behavior have told the algorithm you either play or love. The appearance of the model is optimized for things you have slowed down to look at in your feed, like a favorite actor or actress. This process constantly re-optimizes. The ad copy, the athlete, the music, the setting, the sport, and perhaps even the product itself are all AI-generated.

This is what we should expect for the next several years, and it is really dangerous.

This is the other reason the name Moloch's Bargain fits. Moloch demanded child sacrifice, and unfortunately, when we are younger, we far too often succumb to our emotional responses instead of reason.

Humans on Both Sides of the Loop

I see only one way out of this…

We need AI that is human-optimized, both in its creation and as an assistant on the other side, where the content is consumed.

Anyone who has seen the movie The Social Dilemma is well aware of how quickly this can turn dark.

We need to focus on humans in the loop on both sides of the equation, for the business and the consumer.

For brands, this means putting humans back in control to prevent AI from sacrificing ethics for clicks. We've seen how poorly companies have handled this in the past. Businesses must implement systems where people review and approve the outputs, but more importantly, they must track how far the AI is deviating from what is acceptable. There needs to be real explainability built into the models.
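As a rough sketch of what "review and approve, but also track deviation" could look like, the snippet below routes every AI-generated draft to a person and blocks drafts that drift too far. Everything here is hypothetical: the `Draft` fields, the unsupported-claim ratio as a deviation measure, and the 0.1 threshold are assumptions for illustration, not an established standard.

```python
from dataclasses import dataclass


@dataclass
class Draft:
    text: str
    unsupported_claims: int  # claims a fact-check step could not verify
    total_claims: int


def deviation(draft: Draft) -> float:
    # Share of claims with no supporting evidence (assumed metric).
    if draft.total_claims == 0:
        return 0.0
    return draft.unsupported_claims / draft.total_claims


def route(draft: Draft, threshold: float = 0.1) -> str:
    # Nothing is published automatically: a draft is either blocked
    # outright or queued for a human to approve.
    if deviation(draft) > threshold:
        return "blocked"
    return "needs_human_approval"


def average_deviation(history: list[Draft]) -> float:
    # A rising average over time signals the system is drifting.
    if not history:
        return 0.0
    return sum(deviation(d) for d in history) / len(history)


drafts = [
    Draft("Hydrates after a workout.", unsupported_claims=0, total_claims=1),
    Draft("Doctors agree it doubles stamina.", unsupported_claims=2, total_claims=2),
]
for d in drafts:
    print(route(d), "-", d.text)
print("average deviation:", average_deviation(drafts))
```

The design choice that matters is that there is no "auto-approve" branch. The metric only decides what a human never has to look at because it is already rejected.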

For consumers, this means demanding new standards of truth from platforms. Ultimately, a form of truthful statement analysis will need to be automatically applied to every ad or creative.

In the long run, this could be beneficial, even for the current state of advertising and marketing.

I am in the marketing business, but I’ve never hidden my dislike for the way marketing operates today. It needs an overhaul, and with this newfound AI capability, we need to figure this out quickly.

I’ve made the statement before that I think marketing is evil.

This is the image Google’s Gemini creates from reading this post. (yikes.)

I think we'll end up sacrificing much more than just our attention if we don’t get humans on both sides of this.